On May 10-12, 2022, Google hosted its annual I/O conference and announced several game-changing capabilities for mobile marketers, UA managers, and ASO folks.

For avid followers of Vaimolix’s research team, this comes as no surprise. After iOS 15 was announced, we predicted that Google Play would close the gap with the App Store in terms of marketing capabilities.

But Google Play didn’t just call the changes made on the iOS side; it also upped the ante.

35 Custom Product Pages, you say? How about up to 50 Custom Store Listings?

In-App Events, you say? Let’s take a look at LiveOps cards for all.

Product Page Optimization A/B testing, you say? Here are new updates to Google Play Store Listing Experiments that give you even more control over your A/B tests.


In a nutshell, Google leveled the playing field. Many smart mobile marketing teams are already leveraging the iOS App Store’s marketing and ASO capabilities to better identify, segment, and acquire new users by creating personalized product pages for each of their valuable audience segments.

This craft just became way more valuable as it can be applied to Google Play as well. Let’s dive into the announcements.

1. Custom Store Listings: Move Aside Apple, We Call and Raise You

First, Google Play announced that you will be able to create up to 50 Custom Store Listings (CSLs), each with a dedicated URL you can use to drive traffic straight to it.

These CSLs are very similar to Custom Product Pages (CPPs) in terms of providing customization options for creatives, text elements, and localization.

With dedicated analytics and metrics for each CSL, you can analyze its performance, the uplift it achieves over your default Store Listing, and even the revenue impact of specific campaigns you drive to specific CSLs (more about it here – The 2022 iOS Custom Product Pages Playbook).

One key difference here is that Google owns a far more significant ad network than Apple Search Ads. It owns Google Ads, including the very popular UAC (Universal App Campaigns) product, and can easily integrate CSLs with Google Ads.

We can see hints of that in two places. The first is a screenshot shared in a post on the Android Developers Blog, where the targeting options read: Target users by Country, URL, or Google Ads Campaign.

Reports from the thriving ASO Stack Community, backed by our independent research, indicate that this feature hasn’t yet rolled out to most developers. However, we expect it to arrive in due time, which means that one of the most important traffic sources for Google Play apps will support CSLs, and you’ll be able to adopt them freely.

As a side note, Google Experiments will not support running tests on Custom Store Listings that target users by a URL.

2. LiveOps: The move to an event-driven store continues

Google is expanding its LiveOps product, which closely resembles the In-App Events feature on the App Store, to more developers, with the intention of releasing it to everyone soon.

This feature will allow you to add different events, embed a deep link within each event, and benefit from significantly more visibility across the Play Store for both new and returning users.

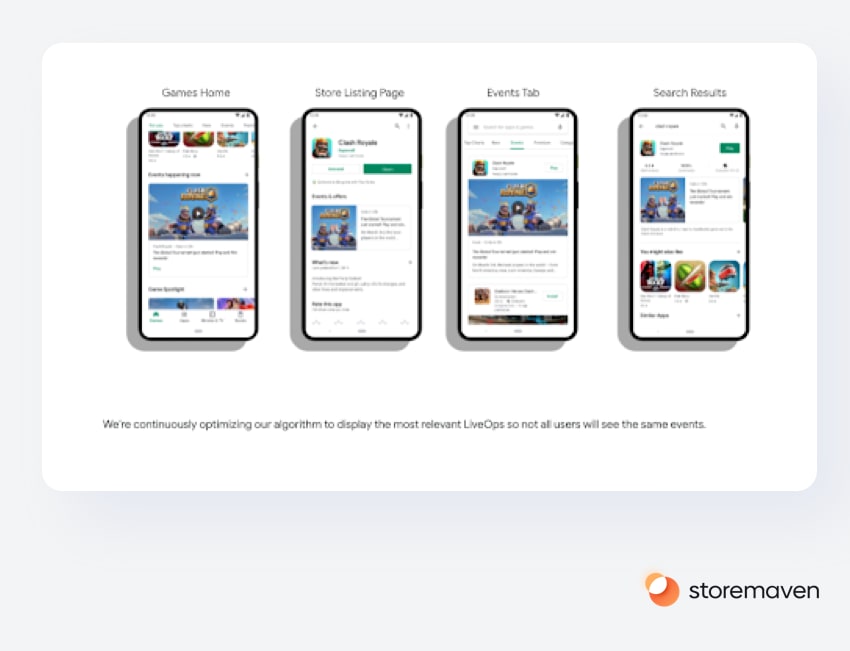

LiveOps cards will appear in the Games Home tab, the Events tab, within search results (their metadata will probably be indexed, similar to the App Store), and on the Store Listing page itself.

LiveOps cards will also include analytics, which will allow you to understand their impact, and again, measure the revenue impact of each event.

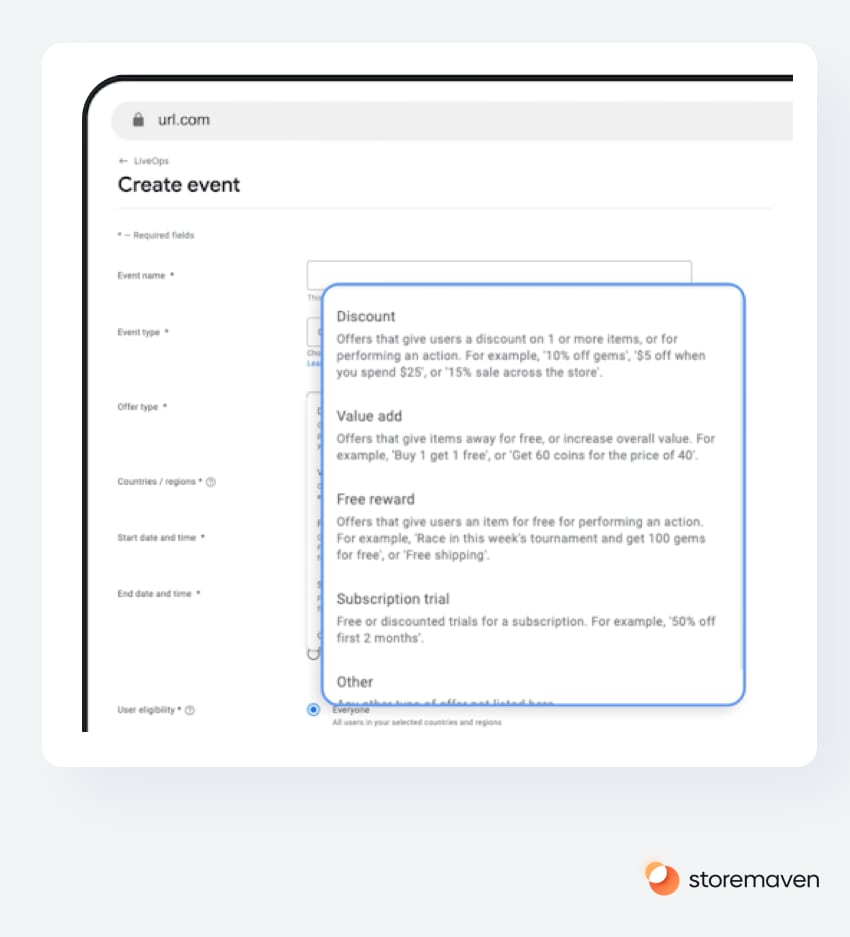

One particularly interesting and promising event type that has rolled out globally is Offers, which includes discounts, value-add offers, free rewards, and subscription trials. These can be extremely impactful in terms of appeal and conversion rates.

3. Google Play Store Listing Experiments: New Power, New Responsibility

Now out of beta, the new Google Play Store Listing Experiments represent a significant change in the way Google approaches experiments.

First, Google has decided to move even deeper into the Frequentist statistics camp, and away from Bayesian statistics, for Store Listing Experiments. Many find this counterintuitive, since across many of the experimentation products it offers for web experiences, Google is an avid supporter and even evangelist of Bayesian statistics.

What does it mean for you?

In the previous version of Google Play Store Listing Experiments, Google collected data for each variation (how many installs it received), took into account the percentage of overall traffic it received (say, 50%), and then multiplied the actual data by the corresponding factor (in this case, 2) to determine Scaled Installs. In other words, Scaled Installs estimated how many installs that variation would receive if it got 100% of traffic.

The problem is that installs and conversion rates depend heavily on the amount of traffic. If you double traffic, you will very likely not double installs, because conversion rates drop as you reach a wider and larger audience. Linear scaling ignores this.

This resulted in a lot of mistrust in Google Experiments within the ASO community, as many tests produced results that didn’t hold up in real life and sometimes even led to a drop in conversion rates.
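The Scaled Installs calculation described above amounts to dividing a variant’s observed installs by its share of traffic. The sketch below is a minimal illustration of that arithmetic; the function name and the numbers are hypothetical, not taken from the Play Console.

```python
def scaled_installs(observed_installs: int, traffic_share: float) -> float:
    """Linearly project a variant's installs to 100% of traffic.

    traffic_share is the fraction of store listing traffic the variant
    received (0.5 for a 50/50 split). The projection implicitly assumes the
    conversion rate would stay the same at full traffic.
    """
    if not 0 < traffic_share <= 1:
        raise ValueError("traffic_share must be in (0, 1]")
    return observed_installs / traffic_share


# Illustrative numbers only: 1,200 installs on 50% of traffic.
print(scaled_installs(1200, 0.5))  # 2400.0
```

The weak point is the built-in assumption of a constant conversion rate: dividing by the traffic share treats the extra, less targeted visitors as if they would convert just like the ones already in the test.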

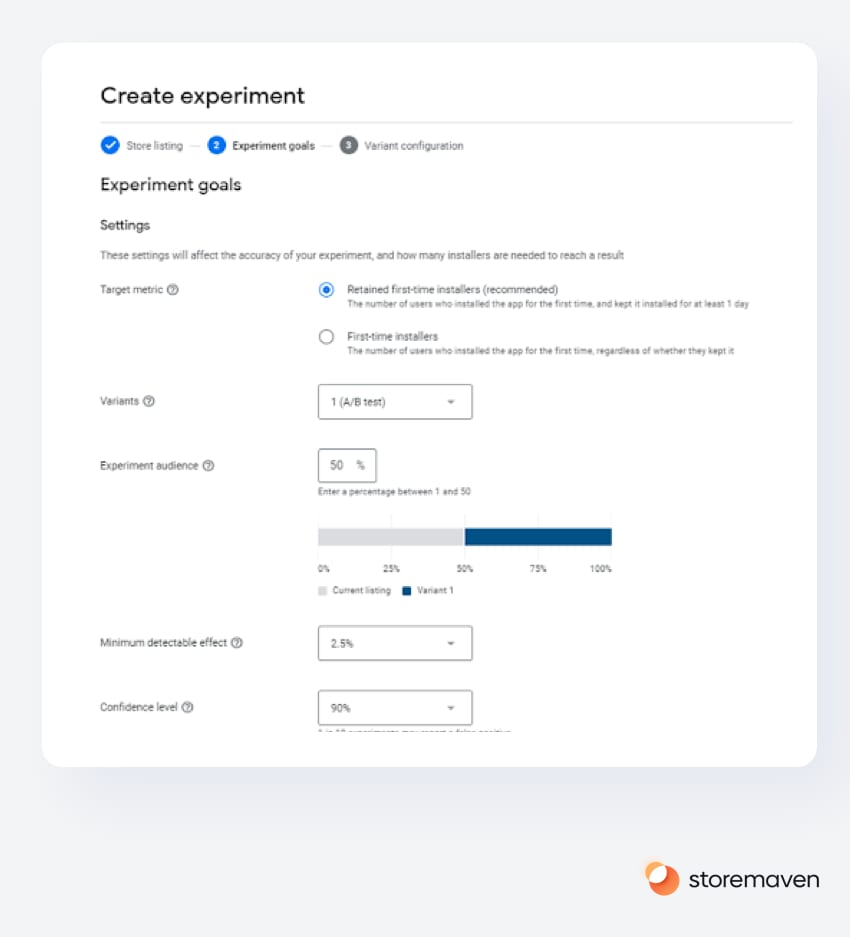

In the new Google Play Experiments, the setup wizard now requires you to configure your test by selecting several parameters:

- Minimum Detectable Effect (MDE) = the smallest uplift you want the test to be able to detect and declare a winner for (that is, to reject the null hypothesis, “there is no meaningful difference in performance between the test variations”). The higher the MDE, the smaller the sample you need, since the test is less sensitive and only detects larger uplifts; the trade-off is a higher chance that the test concludes no uplift was found.

- Confidence Level = probably the better-known parameter. It controls how often the test declares a false winner: at a 90% confidence level, roughly 1 in 10 experiments in which the variations truly perform the same would still report a winner (a false positive).

- Experiment goal = the metric used to measure the performance of each variation. You now need to choose between D-1 retained first-time downloads and first-time downloads.
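To make the Confidence Level parameter concrete, here is a textbook two-sided, pooled two-proportion z-test, one common frequentist way of deciding whether a variant’s conversion rate differs significantly from the control’s. Google does not publish its exact computation, so treat this as an illustration of the idea, not as what the Play Console runs internally; the function name and data are hypothetical.

```python
import math
from statistics import NormalDist
from typing import Tuple


def two_proportion_z_test(conv_a: int, visitors_a: int,
                          conv_b: int, visitors_b: int,
                          confidence: float = 0.90) -> Tuple[float, float, bool]:
    """Compare control (A) and variant (B) conversion with a pooled z-test.

    conv_* counts users who reached the goal metric (e.g. first-time or
    D-1 retained downloads); visitors_* counts store listing visitors.
    Returns (z, p_value, significant), where significant means p < 1 - confidence.
    """
    p_a = conv_a / visitors_a
    p_b = conv_b / visitors_b
    pooled = (conv_a + conv_b) / (visitors_a + visitors_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / visitors_a + 1 / visitors_b))
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))  # two-sided
    alpha = 1 - confidence  # tolerated false-positive rate when there is no real difference
    return z, p_value, p_value < alpha


# Hypothetical data: 5.0% vs 5.5% conversion on 20,000 visitors each.
z, p, significant = two_proportion_z_test(1000, 20000, 1100, 20000, confidence=0.90)
print(significant)  # True
```

With the same data, raising the confidence level to 99% makes the difference no longer significant, which shows how this single setting changes which tests produce a winner.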

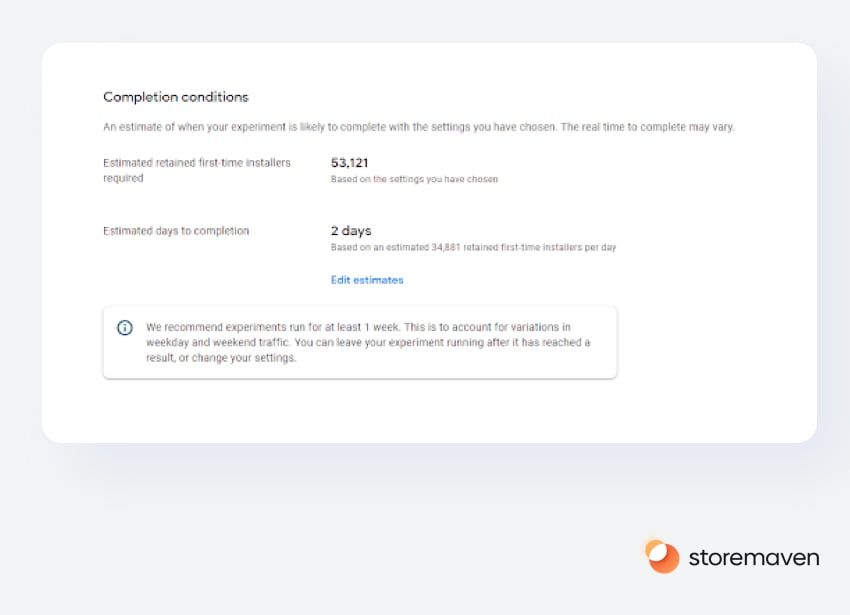

Based on the test configuration, you will receive an estimate of the sample size needed to conclude the test and, based on your traffic volume, how long the test will take.
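The sample size and duration estimate can be approximated with the standard formula for a two-sided two-proportion test. This is a sketch under stated assumptions: the MDE is treated as relative to a known baseline conversion rate, statistical power (the chance of detecting a true uplift of MDE size) is an assumed input set to 80% here rather than a documented Play Console setting, and the function names and traffic numbers are hypothetical.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline_cr: float, mde: float,
                            confidence: float = 0.90, power: float = 0.80) -> int:
    """Visitors needed per variant for a two-sided two-proportion test.

    mde is relative: 0.10 means detecting a lift from baseline_cr to
    baseline_cr * 1.10. power is an assumed input (hypothetical default).
    """
    p1 = baseline_cr
    p2 = baseline_cr * (1 + mde)
    z_alpha = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    z_beta = NormalDist().inv_cdf(power)
    p_bar = (p1 + p2) / 2
    numerator = (z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * math.sqrt(p1 * (1 - p1) + p2 * (1 - p2))) ** 2
    return math.ceil(numerator / (p2 - p1) ** 2)


def days_to_complete(n_per_variant: int, variants: int, daily_visitors: int) -> int:
    """Days needed if daily_visitors enter the experiment each day, split evenly."""
    return math.ceil(n_per_variant * variants / daily_visitors)


# Hypothetical app: 5% baseline conversion, 10% relative MDE, 90% confidence.
n = sample_size_per_variant(0.05, 0.10)
print(n, days_to_complete(n, variants=2, daily_visitors=5000))
```

Raising the MDE shrinks the required sample, while raising the confidence level grows it, which is the trade-off described in the MDE and Confidence Level parameters above.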

So the bottom line on the new additions to Google Experiments:

- This does give you more control and more data for each one of your Google Experiments. If you do invest the time to configure the test according to your unique app/game performance characteristics, you can run tests that should be more accurate than the previous Experiments version.

- The value from the previous point is not that easy to realize. Not every marketing team has ongoing access to great data scientists and statisticians to help with test configuration, and configuring test parameters improperly will almost always lead to a false result or, worse, a decrease in conversion rate because you applied a false winner (or missed out on a great variation that could have increased conversion rates).

In Conclusion

This is overall an excellent update from Google, providing more tools and more data for mobile marketers, UA & ASO folks, and marketing leaders to make better decisions for their mobile growth.

The value on the table could be millions of dollars (or more) if you leverage the new way of marketing and growing mobile apps and games. The good news is – if you leaned into the iOS 15 changes last year, you are more than ready to apply your methodologies on the Google Play side.

So tune in for our upcoming guides and articles about each of these features and how to maximize your Google Play mobile growth.